
AI Design

Design and build AI-powered features for Watermark products with confidence. The following curated list of AI Design Guidelines will help you design transparent, ethical, and user-centered AI experiences.

Guidelines

Set Clear Expectations

If users don't understand what an AI feature does (or assume it's smarter than it is) they may lose trust when the result isn't what they expected. Setting clear expectations helps prevent confusion, especially for users who rely on predictable system behavior.

Best Practices

- Briefly describe the purpose and outcome of the AI interaction in plain language near actions powered by AI

- Use Ripple's AI Communication patterns, including labels and helper text, to communicate when a feature is AI-assisted or in beta

- Set expectations about accuracy, uncertainty, and any required human review and judgement

- Label the AI output with a confidence status indicator when designing for predictive AI to communicate the system's level of certainty

- Don't assume users understand what AI is doing behind the scenes

- Don't hide limitations or overpromise precision

- Don't rely only on tooltips—make key expectations visible at a glance
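As one illustration of the confidence status indicator mentioned above, the sketch below maps a model score to a visible, plain-language label. The type names, the 0 to 1 score range, the thresholds (0.8 and 0.5), and the label wording are all assumptions made for this example; they are not defined by Ripple or by these guidelines and would need to be agreed per product.

```typescript
// Hypothetical sketch: none of these names or thresholds come from Ripple.
type ConfidenceLevel = "high" | "medium" | "low";

interface ConfidenceStatus {
  level: ConfidenceLevel;
  label: string; // visible text shown next to the AI output, not hidden in a tooltip
}

// Maps a score in the range [0, 1] to a status the UI can display.
// The cut-off values are assumptions and should be tuned per model and product.
function confidenceStatus(score: number): ConfidenceStatus {
  if (!isFinite(score) || score < 0 || score > 1) {
    throw new RangeError("score must be a number between 0 and 1");
  }
  if (score >= 0.8) {
    return { level: "high", label: "High confidence" };
  }
  if (score >= 0.5) {
    return { level: "medium", label: "Medium confidence: review recommended" };
  }
  return { level: "low", label: "Low confidence: review required" };
}
```

Pairing the level with a short instruction about review keeps the expectation about human judgement visible at a glance.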

Show the Value, Not the Magic

Most users don't care (or need to know) about the underlying technological layers that are powered by machine learning or natural language processing. They care about what it helps them accomplish. Prioritizing and communicating value builds trust and keeps content accessible to most levels of technical comfort.

Best Practices

- Use the phrase "powered by AI" for all areas generated by AI

- Keep headings, labels, and buttons action-oriented using verbs that match user goals, such as "Review patterns," "Explore categories," "Analyze comments"

- Keep technical terms in the background unless directly relevant to decision-making

  If model transparency or additional technical specifications are important for the user to understand, avoid embedding them in the core UI, and link to a "Learn more" interaction pattern or resource instead

- Don't lead with buzzwords like "AI-powered," "smart," or "automated" without additional context about the value or user goals

- Don't explain the technology before explaining the benefit

- Don't assume that transparency requires technical depth

Make Data Use Transparent

Users deserve to know how their data is powering AI features. Transparency fosters consent, builds trust, and helps meet legal and ethical obligations around privacy.

Best Practices

- Clearly state what inputs the AI uses and where they come from

  For example, "Based on your past assessment plans…," or "This summary is based on responses collected from your recent course evaluations."

- Use the recommended AI Communication patterns to ensure language meets compliance

- Provide opt-in or opt-out settings to allow users to control their consent for AI features in product settings

- Don't imply that AI outputs are fully private if data is processed externally

- Don't use vague phrases like "smart suggestions" without context

Explain Just Enough

AI features can be confusing if users don't understand why a suggestion appeared or how it was generated. But overexplaining can be just as harmful since it clutters the interface and adds cognitive load.

Best Practices

- Offer short, plain-language explanations near the AI action or functionality

- Use phrases like "Based on your recent entries…" or "Suggested using past feedback"

- Show where the insight or recommendation came from when it's relevant to the user's trust

- Don't try to explain the algorithm or model unless legally or ethically required

- Don't rely on technical terms like "classification," "embedding," or "semantic similarity"

- Don't hide explanations behind obscure icons or require multiple clicks to find context

Encourage Exploration Safely

AI features are often new, unfamiliar, or unpredictable. If users are afraid to try them because they worry they'll lose work or make a mistake, they are less likely to engage. Making AI features safe to explore builds trust and confidence, especially for users who are skeptical or less technically confident.

Best Practices

- Let users preview results before committing to changes

- Make it easy to undo or revert AI-generated content

- Use soft, invitational language like "Try it" or "Preview suggestion"

- Use non-destructive patterns like side-by-side previews or drafts

- Reinforce that the user stays in control (e.g. "You can review and edit this before saving.")

- Don't apply changes automatically without the user's permission

- Don't assume users will trust the system on the first try

- Don't make recovery paths (like Undo or Edit) hard to find or require extra steps
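The preview, accept, and undo practices above can be sketched as a small state model in which an AI suggestion never overwrites saved content until the user accepts it. This is a minimal sketch under stated assumptions: `DraftState` and the four function names are hypothetical, and a real product would likely keep a longer undo history than the single snapshot used here.

```typescript
// Hypothetical sketch of a non-destructive preview flow; names are illustrative only.
interface DraftState {
  saved: string;           // content the user last committed
  preview: string | null;  // AI suggestion shown alongside, not yet applied
  previous: string | null; // snapshot kept so an accepted suggestion can be undone
}

// Show a suggestion without touching the saved content.
function showPreview(state: DraftState, suggestion: string): DraftState {
  return { saved: state.saved, preview: suggestion, previous: state.previous };
}

// Apply the suggestion only on explicit user action, keeping a snapshot for undo.
function acceptPreview(state: DraftState): DraftState {
  if (state.preview === null) {
    return state;
  }
  return { saved: state.preview, preview: null, previous: state.saved };
}

// Discard the suggestion; saved content is unchanged.
function dismissPreview(state: DraftState): DraftState {
  return { saved: state.saved, preview: null, previous: state.previous };
}

// Revert to the content that existed before the suggestion was accepted.
function undoAccept(state: DraftState): DraftState {
  if (state.previous === null) {
    return state;
  }
  return { saved: state.previous, preview: null, previous: null };
}
```

Because every function returns a new state rather than mutating the old one, the original content remains available for a side-by-side comparison while the preview is open.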

Give Control When AI Fails

Even the best AI systems will occasionally fall short. Whether it's due to limited data, unclear input, or model failure, users need a clear way to take over. If we don't support graceful failure, users lose confidence and may abandon the feature entirely.

Best Practices

- Let users edit, retry, or bypass a failed output

  Offer users a simple path to correct or override the result.

- Offer manual options where possible (e.g. "Write your own," "Start from scratch," "Try again")

- Use clear, calm language to explain what went wrong

  Avoid blame and jargon when describing the error.

- Provide fallback states with clear actions (e.g. "We couldn't generate a summary this time. You can write your own below or try again.")

- Use Ripple's Empty States or System Communication patterns to guide recovery

- Don't leave users stuck with no path forward if an output fails

- Don't expose technical error messages that users can't act on

- Don't reset or delete user input without a clear warning
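A fallback state along the lines described above might be modeled as follows. This is a hedged sketch, not a Ripple API: `GenerationResult`, `FallbackAction`, `ViewState`, and `toViewState` are hypothetical names, and the only text taken from the guidelines is the example fallback message and the action labels. The technical detail of the failure is kept out of the view state, and the user's input is always carried through untouched.

```typescript
// Hypothetical sketch of a graceful-failure view model; names are illustrative only.
type GenerationResult =
  | { status: "success"; output: string }
  | { status: "failed"; technicalDetail: string };

interface FallbackAction {
  id: "retry" | "write-own";
  label: string;
}

interface ViewState {
  message: string | null;    // calm, plain-language explanation, or null on success
  content: string;           // AI output to display, empty on failure
  actions: FallbackAction[]; // clear next steps so the user is never stuck
  userInput: string;         // always preserved, never reset on failure
}

function toViewState(result: GenerationResult, userInput: string): ViewState {
  if (result.status === "success") {
    return { message: null, content: result.output, actions: [], userInput };
  }
  // result.technicalDetail is deliberately not surfaced to the user;
  // in a real product it would go to logging or monitoring instead.
  return {
    message:
      "We couldn't generate a summary this time. You can write your own below or try again.",
    content: "",
    actions: [
      { id: "retry", label: "Try again" },
      { id: "write-own", label: "Write your own" },
    ],
    userInput,
  };
}
```

Modeling failure as its own result variant makes it harder to ship a screen that has no recovery action.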

Invite Feedback at the Right Time

We can iterate on the design and function of the AI systems we build, but only when we know that something didn't work as expected or didn't meet user expectations. Allowing users to provide feedback also helps them feel heard when something goes wrong in the product. However, if feedback requests are too frequent, too complex, or come too soon, users won't engage meaningfully. Timing and simplicity are key.

Best Practices

- Offer a feedback prompt that aligns with Watermark's evaluation tools

  Evaluation and observability tools, which are designed to experiment with, evaluate, and troubleshoot AI and LLM applications, may vary by product.

- Add lightweight feedback controls near the AI-powered feature

  Feedback mechanisms should be subtle and thoughtfully integrated in a workflow.

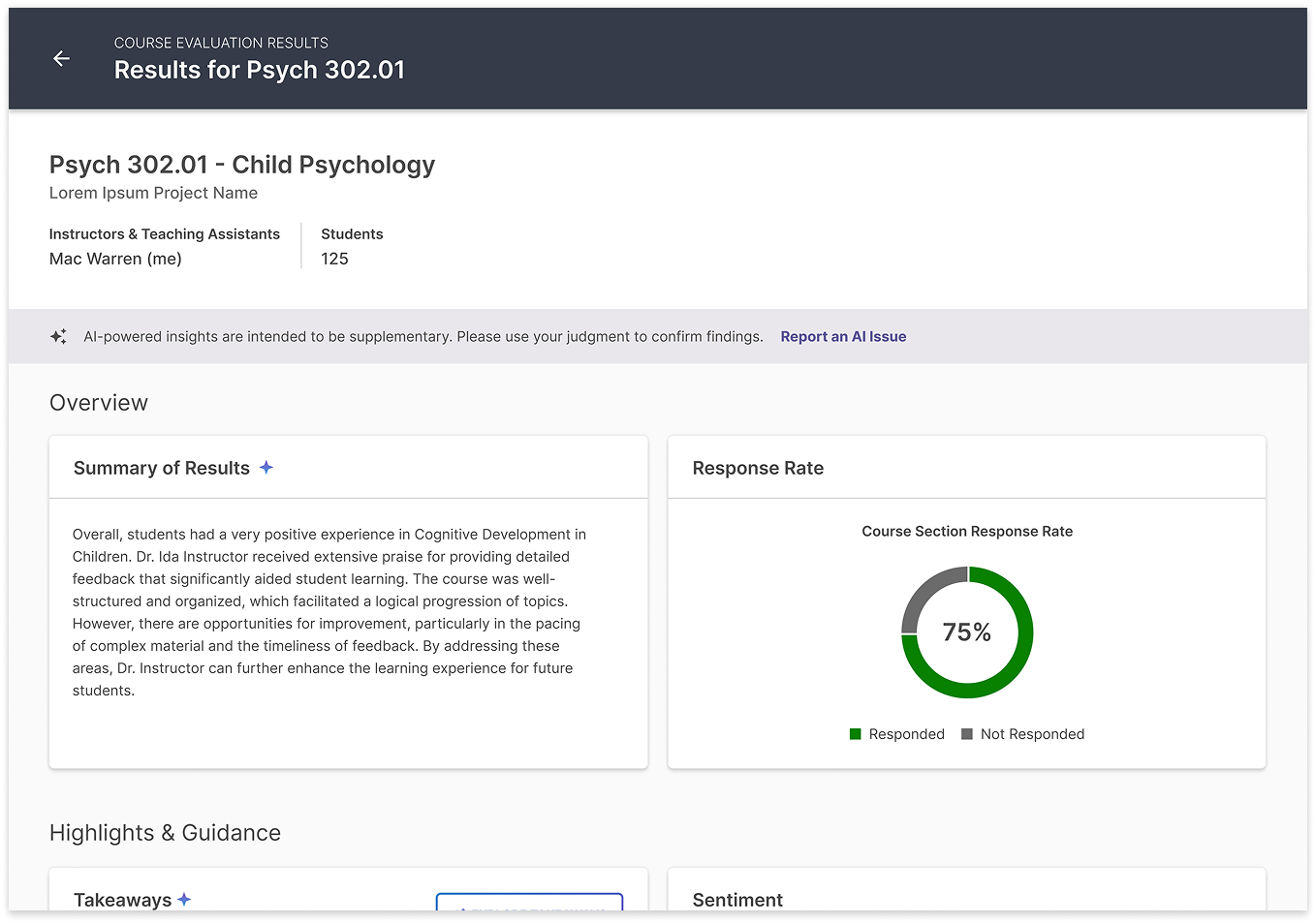

- Use neutral, open language (e.g. "Report an AI issue.")

- Make feedback optional and easy to skip

- Don't ask multiple open-ended questions without offering a quick alternative

- Don't design feedback forms that require multiple pages to complete

- Don't interrupt a user's workflow to ask for feedback about the AI result

Glossary

This glossary defines key terms related to Artificial Intelligence (AI) and Machine Learning (ML). These definitions are intended to help product development users understand concepts used within Watermark products.

| Term | Definition |
|---|---|
| Artificial Intelligence (AI) | The simulation of human intelligence processes by machines. These processes include learning (acquiring information and rules for using it), reasoning (using rules to reach conclusions), and self-correction. |
| Machine Learning (ML) | A subset of AI that enables systems to learn from data, identify patterns, and make decisions with minimal human intervention. |
| Deep Learning | A subfield of machine learning that uses artificial neural networks with multiple layers (deep neural networks) to learn complex patterns from data. |
| Generative AI | AI models that create new content, such as text, images, audio, or code, that is similar to human-created content. |
| Large Language Model (LLM) | A type of AI model trained on a massive amount of text data to understand and generate human-like language. |
| Natural Language Processing (NLP) | A field of AI that focuses on enabling computers to understand, interpret, and generate human language. |
| Prompt Engineering | The process of designing and refining inputs (prompts) to guide AI models, especially LLMs, to generate desired outputs. |
| Training Data | The dataset used to train an AI or ML model. |
| Model | The output of an ML algorithm applied to data. It represents what the ML system has learned from the training data. |
| Bias | A systematic error in an AI model's output that can lead to unfair or inaccurate results. Bias often stems from biases present in the training data. |
| Hallucination (AI) | When a generative AI model produces output that is factually incorrect or nonsensical despite appearing coherent or confident. |
| User Feedback Loop | A system for collecting and incorporating user input to improve AI model performance and user experience. |
| Latency | The delay between an input to an AI system and its corresponding output. |
| Monitoring (AI) | Continuously tracking the performance, behavior, and potential issues of deployed AI models. |
| Fine-tuning | Adjusting a pre-trained AI model on a smaller, specific dataset to adapt it to a particular task or domain. Note: Watermark does not currently create custom generative AI models, so this practice is not applicable. |
| Responsible AI | A set of practices and principles for developing and deploying AI systems in a way that is ethical, fair, transparent, and accountable. |
| Guardrails (AI) | Constraints or rules implemented in an AI system to prevent undesirable or harmful outputs. |
| Controllability | The design of AI systems that allow users to intervene, override, or refine outputs when necessary. |
| Scalability | The ability of an AI system to handle increasing amounts of data and users without compromising performance. |
| Ethical AI | Considering the moral implications and societal impact of AI systems throughout their lifecycle. |